Deep image reconstruction from human brain activity

Research Paper | Short Note

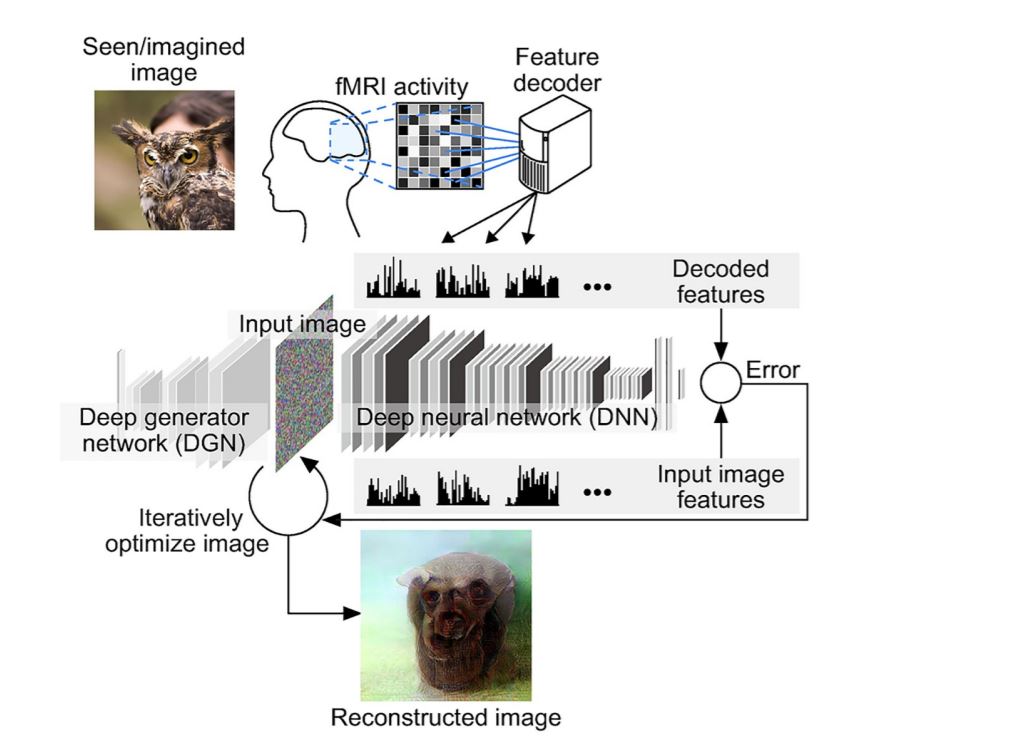

This note summarizes a research paper that compares how humans perceive images with how deep neural networks (DNNs) represent them, and uses that link to replicate what a person sees. The basic idea is to "read" what a person is looking at from their fMRI activity and then visualize it as a reconstructed image.

A human subject looks at a picture, as in the example image here, while their fMRI activity is measured. A feature decoder maps that fMRI activity to the features of a deep neural network. The method then reconstructs the image whose network features are closest to the decoded features, and that reconstruction is the estimate of what the subject sees.
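The last step, finding an image whose network features match the decoded ones, can be illustrated with a toy example. This is only a minimal sketch under strong assumptions: the "network" is a hypothetical fixed linear map `W` from 3 pixels to 4 features (not VGG), the decoded features are assumed to be perfect, and plain gradient descent on the squared feature distance stands in for whatever optimizer the paper uses.

```python
# Hypothetical stand-in for one DNN layer: a fixed linear map from
# 3 "pixels" to 4 features. A real pipeline would use a deep network.
W = [
    [1.0, 0.0, 0.5],
    [0.0, 1.0, -0.5],
    [0.5, 0.5, 1.0],
    [1.0, -1.0, 0.0],
]


def toy_features(image):
    """Compute the toy network's features for an image (list of pixel values)."""
    return [sum(w * p for w, p in zip(row, image)) for row in W]


def reconstruct(target_features, n_pixels=3, lr=0.1, steps=500):
    """Gradient descent on pixels so that toy_features(image) approaches the target."""
    image = [0.0] * n_pixels  # start from a blank image
    for _ in range(steps):
        residual = [f - t for f, t in zip(toy_features(image), target_features)]
        # Gradient of sum(residual ** 2) with respect to each pixel.
        grad = [
            2.0 * sum(r * row[k] for r, row in zip(residual, W))
            for k in range(n_pixels)
        ]
        image = [p - lr * g for p, g in zip(image, grad)]
    return image


seen_image = [0.2, 0.8, 0.5]
decoded_features = toy_features(seen_image)  # assumption: the decoder is perfect
reconstruction = reconstruct(decoded_features)
print([round(p, 3) for p in reconstruction])
```

In practice the decoded features are noisy, so the optimized image would only approximate the one that was seen.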

Process Flow

The fMRI machine measures brain activity: it records which parts of the brain are active by measuring blood flow. A feature decoder is then needed, and its outputs must correspond to features inside a neural network. The network here is VGG, which consists of a stack of layers. To train the decoder, an image is passed through the network and its features are recorded; the same image is shown to the human subject and the fMRI activity is recorded. These paired measurements let the decoder learn a mapping from fMRI activity to network features.
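To make the pairing of fMRI activity and network features concrete, here is a minimal sketch of fitting a decoder on such pairs. It assumes a purely linear decoder trained by batch gradient descent, and it uses synthetic data in place of real recordings; the names `decode`, `fit_linear_decoder`, and `make_toy_pairs` are hypothetical, and the paper's actual decoder may differ.

```python
import random


def decode(W, voxels):
    """Apply the linear decoder: features[j] = sum_i voxels[i] * W[i][j]."""
    return [sum(v * W[i][j] for i, v in enumerate(voxels)) for j in range(len(W[0]))]


def fit_linear_decoder(fmri, feats, lr=0.05, epochs=1000):
    """Fit feats ~ decode(W, fmri) by gradient descent on mean squared error."""
    n, n_vox, n_feat = len(fmri), len(fmri[0]), len(feats[0])
    W = [[0.0] * n_feat for _ in range(n_vox)]
    for _ in range(epochs):
        grad = [[0.0] * n_feat for _ in range(n_vox)]
        for voxels, target in zip(fmri, feats):
            pred = decode(W, voxels)
            for j in range(n_feat):
                err = pred[j] - target[j]
                for i in range(n_vox):
                    grad[i][j] += 2.0 * err * voxels[i] / n
        for i in range(n_vox):
            for j in range(n_feat):
                W[i][j] -= lr * grad[i][j]
    return W


def make_toy_pairs(n_images=30, n_voxels=3, n_features=2, seed=0):
    """Synthetic (fMRI, feature) pairs generated from a hidden linear map."""
    rng = random.Random(seed)
    true_W = [[rng.uniform(-1, 1) for _ in range(n_features)] for _ in range(n_voxels)]
    fmri = [[rng.gauss(0, 1) for _ in range(n_voxels)] for _ in range(n_images)]
    feats = [decode(true_W, voxels) for voxels in fmri]
    return fmri, feats, true_W


fmri, feats, true_W = make_toy_pairs()
W = fit_linear_decoder(fmri, feats)
max_err = max(abs(W[i][j] - true_W[i][j]) for i in range(3) for j in range(2))
print(f"largest weight error: {max_err:.2e}")
```

Real fMRI data has far more voxels than training images, so a practical decoder would need some form of regularization; the toy data here is noise-free and well conditioned.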

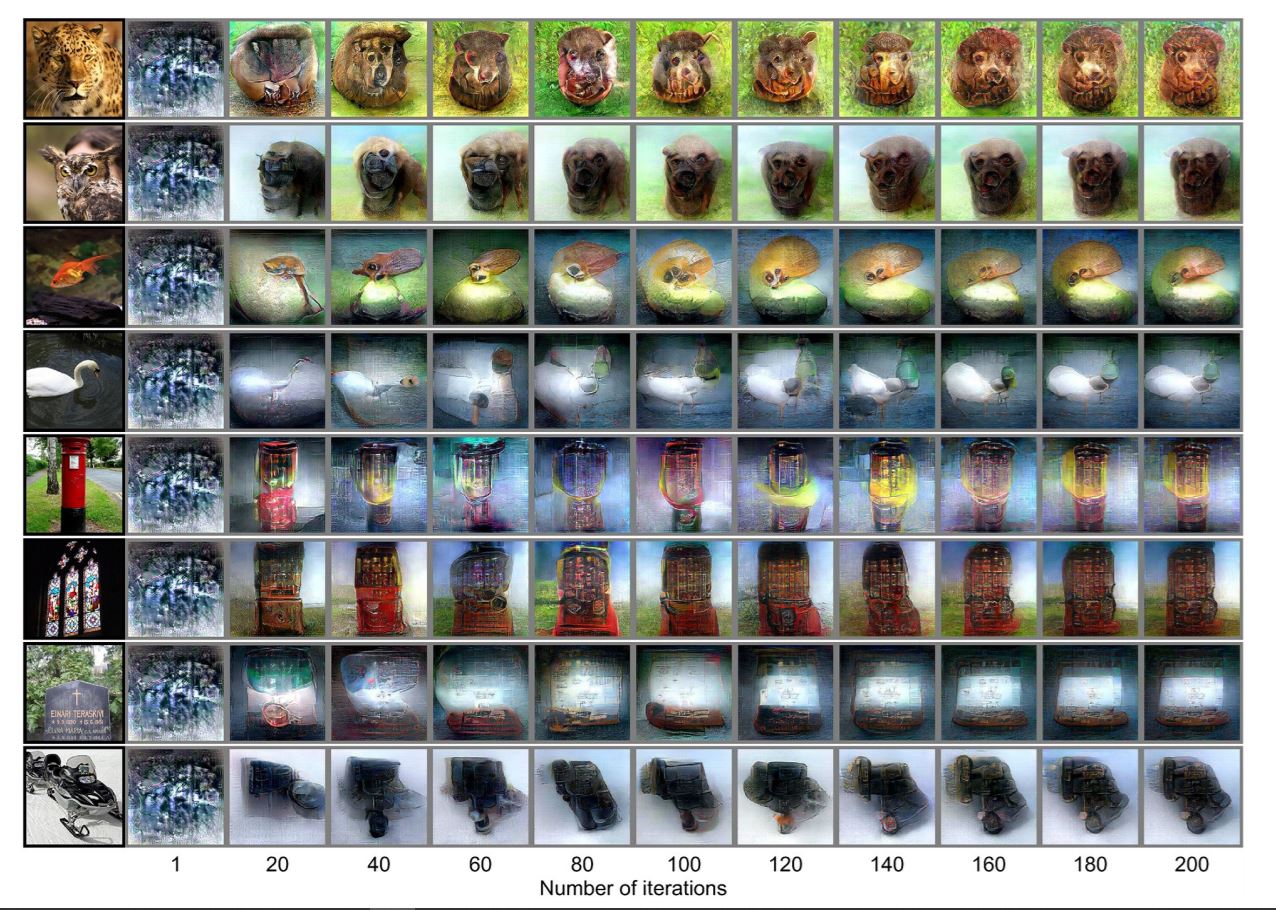

Results

The results of this effort to reconstruct what a human sees are shown below.

Although fMRI data from the human brain was available, the model's reconstructions are not easy to recognize at first glance. Human judges could still match a reconstruction to its original image with an accuracy above 95%, but the model itself did not perform the reconstruction task well.
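An identification accuracy of this kind can be illustrated with a pairwise test: for each reconstruction, a judge is shown the true original and one distractor and must pick the original. The sketch below is an assumption-laden illustration, not the study's protocol: Pearson correlation over pixel values stands in for the human judge, and the tiny four-pixel "images" are made up.

```python
from statistics import mean


def pearson(a, b):
    """Pearson correlation between two equal-length pixel lists."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def pairwise_identification_accuracy(reconstructions, originals):
    """Fraction of (true original, distractor) pairs where the original wins."""
    correct = total = 0
    for i, recon in enumerate(reconstructions):
        score_true = pearson(recon, originals[i])
        for j, distractor in enumerate(originals):
            if i == j:
                continue
            total += 1
            if score_true > pearson(recon, distractor):
                correct += 1
    return correct / total


# Made-up data: three 4-pixel originals and noisy reconstructions of each.
originals = [[0, 1, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
reconstructions = [
    [0.1, 0.8, 0.2, 0.7],
    [0.9, 0.6, 0.3, 0.1],
    [0.2, 0.1, 0.6, 0.9],
]
accuracy = pairwise_identification_accuracy(reconstructions, originals)
print(f"pairwise identification accuracy: {accuracy:.0%}")
```

A high score on a test like this only says that a reconstruction is closer to its own original than to the alternatives; it does not mean the reconstruction is recognizable on its own.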